When we teach about AI Vision sensors, learners naturally ask: What exactly is the AI Vision sensor seeing in real time? To address this, we have developed two solutions for VEX AIM that provide immediate visual feedback to learners.

AI Vision Dashboard on VEX AIM

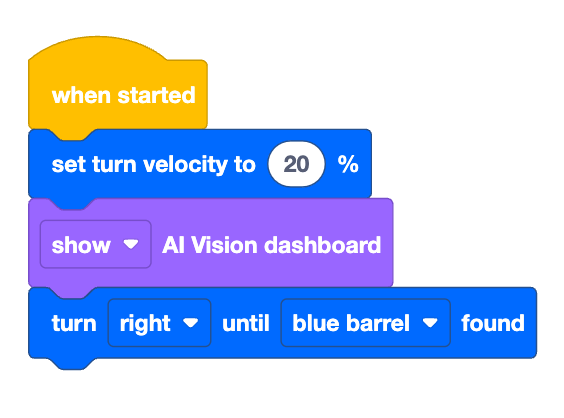

To make AI Vision processing more transparent, VEX AIM includes an AI Vision Dashboard command. Running the show AI Vision dashboard command displays the sensor's detections directly on the robot's screen in real time, so learners can see the detected objects, their positions, and their classifications as the robot moves.

In the example above, I have reduced the turn velocity to 20% for better visualization. This slower movement makes it easier to observe how the AI Vision sensor processes visual inputs in real time.

Here, you can see how the AI Vision sensor identifies objects and displays markers on the dashboard, helping learners understand how AI-driven perception works in a dynamic environment.
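The sequence described above (slow the turn to 20%, show the dashboard, then turn) can be sketched in plain Python. This is only an illustration of the ordering: `SketchRobot` and its methods `set_turn_velocity`, `show_ai_vision_dashboard`, and `turn_right` are hypothetical stand-ins, not the VEX AIM API, and the default velocity of 50% is an assumption made for the sketch.

```python
# Hypothetical stand-in for the robot. These method names are assumptions
# made for illustration; they are NOT the confirmed VEX AIM API.
class SketchRobot:
    def __init__(self):
        self.turn_velocity_percent = 50  # assumed default, for illustration only
        self.dashboard_visible = False
        self.log = []  # records each action in the order it happens

    def set_turn_velocity(self, percent):
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        self.turn_velocity_percent = percent
        self.log.append(f"turn velocity set to {percent}%")

    def show_ai_vision_dashboard(self):
        self.dashboard_visible = True
        self.log.append("AI Vision dashboard shown")

    def turn_right(self):
        self.log.append(f"turning right at {self.turn_velocity_percent}%")


def run_dashboard_demo(robot):
    # Slow the turn first so the dashboard is easier to follow while moving.
    robot.set_turn_velocity(20)
    # Show the detections on screen before the robot starts to turn.
    robot.show_ai_vision_dashboard()
    robot.turn_right()
    return robot.log


demo_log = run_dashboard_demo(SketchRobot())
for entry in demo_log:
    print(entry)
```

The point of the ordering is that the velocity change and the dashboard are both in place before the robot starts moving, so the detections are visible for the whole turn.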

Live Streaming Data to VEXcode

Another feature we developed streams real-time AI Vision data directly into VEXcode AIM. When you connect your robot and navigate to the monitor tab, the software generates a dynamic view of what the AI Vision sensor detects.

This feature provides an accessible way for learners to observe AI Vision data in real time. The generated view also includes additional sensor data, giving learners the information needed to debug their code.

By integrating these features, we are creating a more scaffolded learning experience that helps learners explore AI Vision concepts and understand how robots perceive and interact with their environments.